In today’s healthcare ecosystem, data is both an operational backbone and a compliance challenge. For organizations managing vast networks of primary care centers, protecting patient data while maintaining efficiency is a constant balancing act. As the healthcare industry becomes increasingly data-driven, the need to ensure security, consistency, and compliance across systems has never been more critical.

Primary care organizations depend on sensitive clinical and claims data sourced from multiple payers. Each source typically arrives in a different format—creating integration hurdles and privacy risks. Manual processing not only slows operations but also increases the chance of human error and non-compliance with data protection mandates such as HIPAA.

To overcome these challenges, one leading healthcare provider partnered with Mage Data, adopting its Test Data Management (TDM) 2.0 solution. The results transformed the organization’s ability to scale securely, protect patient information, and maintain regulatory confidence while delivering high-quality care to its patients.

The organization faced multiple, interrelated data challenges typical of large-scale primary care environments:

- Protecting Patient Privacy: Ensuring HIPAA compliance meant that no sensitive health data could be visible in development or test environments. Traditional anonymization processes were slow and prone to inconsistency.

- Data Consistency Across Systems: Patient identifiers such as names, IDs, and dates needed to be masked the same way in every application and database, so that records still matched across systems and reports remained accurate.

- Operational Inefficiency: Teams spent valuable time manually processing payer files in multiple formats, introducing risk and slowing development cycles.

- Scaling with Growth: With over 50 payer file formats and new ones continuously added, the organization struggled to maintain standardization and automation.

These pain points created a clear need for an automated, compliant, and scalable Test Data Management framework.

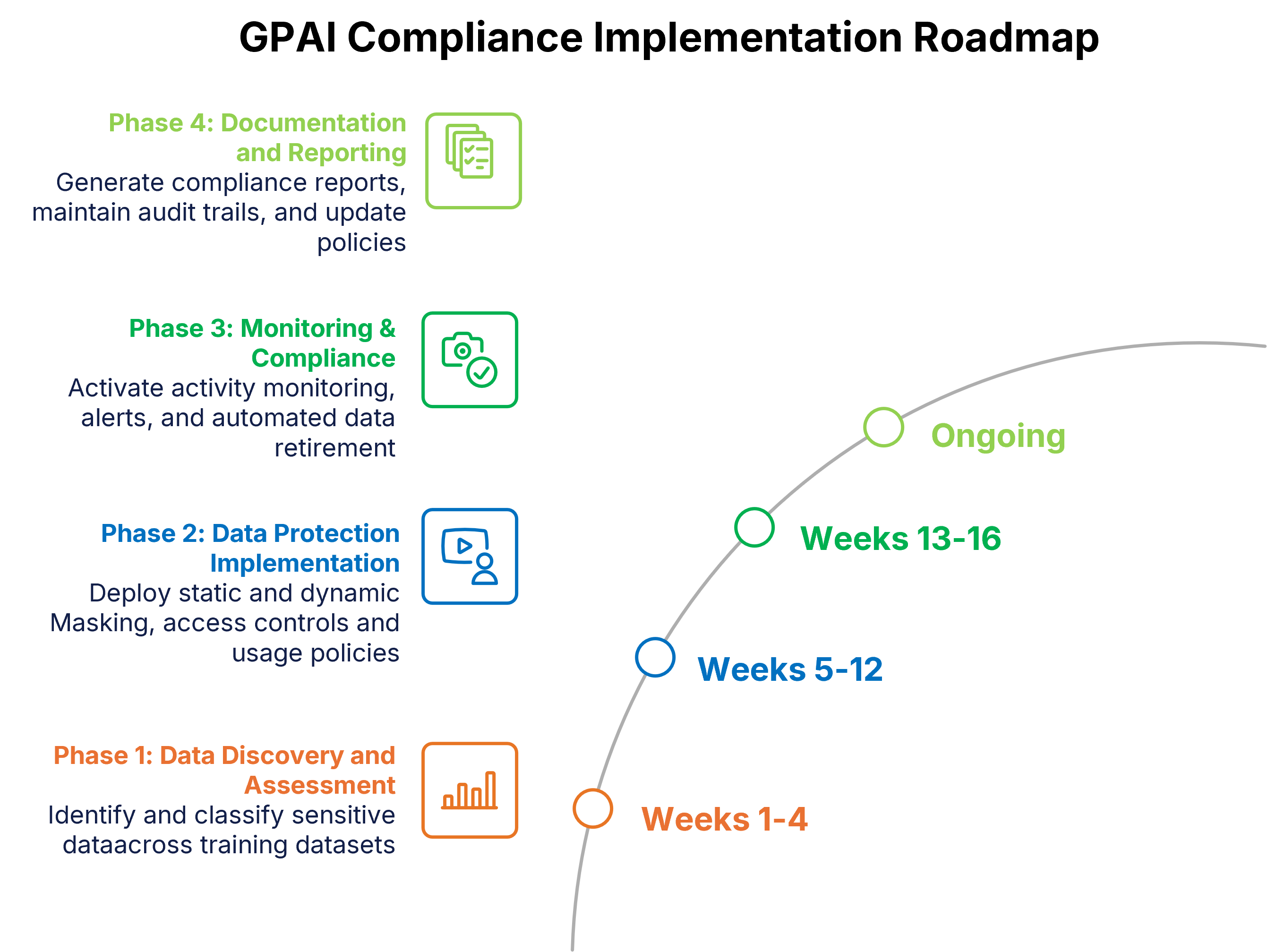

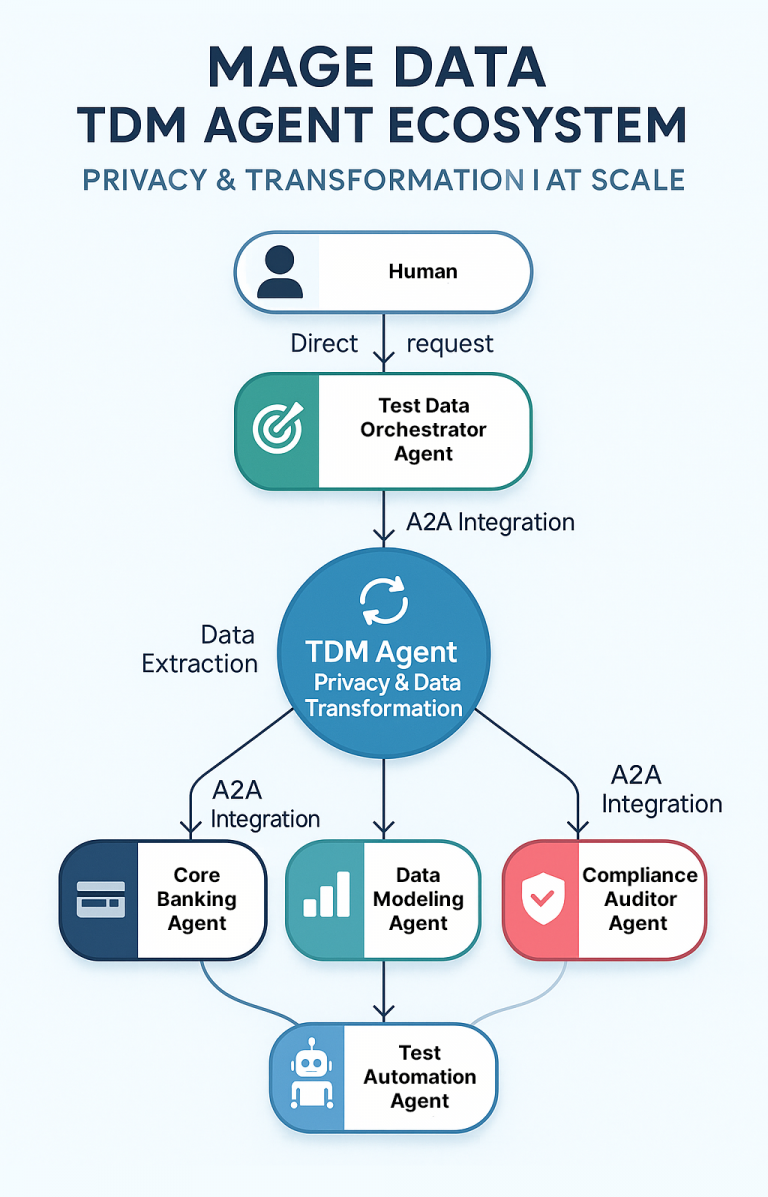

Mage Data implemented its TDM 2.0 solution to address the organization’s end-to-end data management and privacy challenges. The deployment focused on automation, privacy assurance, and operational scalability.

- Automated Anonymization

Mage Data automated the anonymization of all payer files before they entered non-production environments. This ensured that developers and testers never had access to real patient data, while still being able to work with datasets that mirrored production in structure and behavior. The result was full compliance with HIPAA and other healthcare data protection requirements.
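One common way to anonymize structured fields while keeping them usable for testing is deterministic pseudonymization with a keyed hash. The sketch below is illustrative only and is not a description of how Mage Data's product works internally. The field names (`patient_id`, `name`, `visit_date`), the key, and the replacement name lists are all assumptions made for the example.

```python
import hashlib
import hmac
from datetime import date, timedelta

# Hypothetical secret; a real deployment would load this from a key vault.
SECRET_KEY = b"demo-key-not-for-production"

# Small illustrative pools of replacement names (assumed, not from the source).
FAKE_FIRST_NAMES = ["Alex", "Jordan", "Morgan", "Riley", "Taylor", "Casey"]
FAKE_LAST_NAMES = ["Reed", "Hayes", "Brooks", "Lane", "Shaw", "Ellis"]


def _digest(value: str, purpose: str) -> int:
    """Return a stable integer derived from the value with HMAC-SHA256."""
    message = f"{purpose}:{value}".encode("utf-8")
    mac = hmac.new(SECRET_KEY, message, hashlib.sha256)
    return int.from_bytes(mac.digest()[:8], "big")


def mask_patient_id(patient_id: str) -> str:
    """Map a real ID to a stable nine-digit surrogate prefixed with 'P'."""
    return f"P{_digest(patient_id, 'id') % 10**9:09d}"


def mask_name(patient_id: str) -> str:
    """Pick a replacement name that is always the same for a given patient."""
    n = _digest(patient_id, "name")
    first = FAKE_FIRST_NAMES[n % len(FAKE_FIRST_NAMES)]
    last = FAKE_LAST_NAMES[(n // len(FAKE_FIRST_NAMES)) % len(FAKE_LAST_NAMES)]
    return f"{first} {last}"


def shift_date(patient_id: str, original: date) -> date:
    """Shift dates back by a per-patient offset of 1 to 60 days.

    Every date for one patient moves by the same amount, so the intervals
    between that patient's events are preserved.
    """
    offset_days = 1 + _digest(patient_id, "date") % 60
    return original - timedelta(days=offset_days)


def mask_record(record: dict) -> dict:
    """Return a copy of the record with identifying fields replaced."""
    pid = record["patient_id"]
    return {
        **record,
        "patient_id": mask_patient_id(pid),
        "name": mask_name(pid),
        "visit_date": shift_date(pid, record["visit_date"]),
    }
```

Because the surrogate values depend only on the original ID and the key, the same patient receives the same masked ID, name, and date offset in every file and system processed with that key, which is what keeps joins and reports consistent after masking.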

- NLP-Based Masking for Unstructured Text

To mitigate the risk of identifiers embedded in free-text fields—such as medical notes or descriptions—Mage Data integrated Natural Language Processing (NLP)-based masking. This advanced capability identified and anonymized hidden personal data, ensuring that no sensitive information was exposed inadvertently.
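To make the free-text problem concrete, the sketch below redacts a few identifier types from a clinical note using regular expressions. This is a deliberately simplified stand-in, not NLP and not Mage Data's implementation: pattern matching catches well-structured identifiers such as phone numbers, but it cannot recognize person names or addresses written in ordinary prose, which is the gap that a named-entity recognition model is meant to close. The patterns and labels are assumptions chosen for the example.

```python
import re

# Illustrative patterns only; real notes contain many more identifier shapes.
# Order matters: the record-number pattern runs first so its digits are not
# partially consumed by a later pattern.
PATTERNS = [
    ("MRN", re.compile(r"\bMRN[:#]?\s*\d{6,10}\b", re.IGNORECASE)),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\b\d{3}[-. ]\d{3}[-. ]\d{4}\b")),
    ("DATE", re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")),
]


def redact(text: str) -> str:
    """Replace each matched identifier with a bracketed label."""
    for label, pattern in PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text


note = "Pt seen 03/14/2024, MRN: 00123456. Call 555-123-4567. SSN 123-45-6789 on file."
redacted = redact(note)
```

A note such as "Spoke with Jane Doe about her results" would pass through this function unchanged, which illustrates why pattern-only masking is not sufficient for unstructured text.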

- Dynamic Templates and Continuous Automation

Mage Data introduced dynamic templates that automatically adapted to new or changing file types from different payers. These templates, combined with continuous automation through scheduled jobs, detected, masked, and routed new files into development systems—quarantining unsupported formats until validated. This approach reduced manual effort, improved accuracy, and allowed the organization to support rapid expansion without re-engineering its data pipelines.
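The detect, mask, and route flow described above, including quarantine for unrecognized formats, can be sketched as a small template registry. Everything here is hypothetical: the template names, column names, the fixed `***` mask, and the `route_file` function are assumptions for illustration and do not reflect Mage Data's actual template format or API.

```python
from dataclasses import dataclass
from typing import Optional

Record = dict[str, str]


@dataclass(frozen=True)
class Template:
    """Describes one known payer file layout (hypothetical structure)."""
    name: str
    columns: frozenset[str]
    sensitive_columns: frozenset[str]


# Hypothetical registry; supporting a new payer format means adding an entry.
TEMPLATES = [
    Template(
        name="payer_a_claims",
        columns=frozenset({"member_id", "member_name", "claim_amount"}),
        sensitive_columns=frozenset({"member_id", "member_name"}),
    ),
    Template(
        name="payer_b_eligibility",
        columns=frozenset({"subscriber_id", "dob", "plan_code"}),
        sensitive_columns=frozenset({"subscriber_id", "dob"}),
    ),
]


def match_template(header: list[str]) -> Optional[Template]:
    """Return the template whose columns exactly match the file header."""
    columns = frozenset(header)
    for template in TEMPLATES:
        if template.columns == columns:
            return template
    return None


def route_file(header: list[str], rows: list[Record]) -> tuple[str, list[Record]]:
    """Mask and route a file, or quarantine it if no template matches.

    Returns the destination name and the rows released to it. Quarantined
    files release no rows, so unmasked data never reaches development.
    """
    template = match_template(header)
    if template is None:
        return ("quarantine", [])
    masked = [
        {col: ("***" if col in template.sensitive_columns else val)
         for col, val in row.items()}
        for row in rows
    ]
    return ("dev", masked)
```

The sketch matches headers exactly rather than by subset, so a file with an unexpected extra column is quarantined instead of passing through with an unmasked field. A production system would apply real masking functions in place of the fixed `***` value.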

The adoption of Mage Data’s TDM 2.0 delivered measurable improvements across compliance, efficiency, and operational governance:

- Regulatory Compliance Assured: With real patient data kept out of development and test systems, the organization eliminated the risk of HIPAA violations in non-production environments.

- Faster Development Cycles: Developers gained access to compliant, production-like data in hours instead of days—accelerating release cycles and integration efforts.

- Consistency at Scale: Mage Data ensured that identifiers such as patient names, IDs, and dates remained synchronized across systems, maintaining the accuracy of analytics and reports.

- Operational Efficiency: Manual discovery and masking processes were replaced by automated, rule-driven workflows—freeing technical teams to focus on higher-value work.

- Future-Ready Scalability: The solution’s adaptable framework was designed to seamlessly extend to new data formats, applications, and business units as the organization grew nationwide.

Through this transformation, Mage Data enabled the healthcare provider to turn data protection from a compliance burden into a strategic advantage, empowering its teams to innovate faster while safeguarding patient trust.

In conclusion, Mage Data delivers a comprehensive, multi-layered data security framework that protects sensitive information throughout its entire lifecycle. The process begins with data classification and discovery, enabling organizations to locate and identify sensitive data across environments. This is followed by data cataloging and lineage tracking, offering a clear, traceable view of how sensitive data flows across systems. In non-production environments, Mage Data applies static data masking (SDM) to generate realistic yet de-identified datasets, ensuring safe and effective use for testing and development. In production, a Zero Trust model is enforced through dynamic data masking (DDM), database firewalls, and continuous monitoring, providing real-time access control and proactive threat detection. This layered security approach not only supports compliance with regulations and standards such as GDPR, HIPAA, and PCI DSS but also minimizes risk while preserving data usability. By integrating these capabilities into a unified platform, Mage Data empowers organizations to safeguard their data with confidence, ensuring privacy, compliance, and long-term operational resilience.